AI Text Classifier for Content Moderation

Equip your Trust and Safety team with proactive text classification solutions for online sexual harms against children. Built by experts in child safety technology.

Detect Novel CSAM & Text-Based CSE

Safer Predict, built by Thorn, is a proactive text classification solution for detecting child sexual abuse material (CSAM) and child sexual exploitation (CSE). It is powered by innovative tech, trusted training data, and issue expertise.

Safer Predict empowers trust and safety teams to:

- Cast a wider net for detection of potential CSAM and CSE in lines of text and conversations
- Use advanced predictive AI models to identify potential text-based harms, including discussions of sextortion, self-generated CSAM, and potential offline exploitation
- Scale content moderation capabilities efficiently using machine learning classification models
- Choose self-hosted or Thorn-hosted deployment to suit your needs

Let's chat about putting Safer to work for your platform.

Get in touch.

The world’s most innovative companies protect their platforms using Safer

Protect your platform and your users with our AI text classifier

Harness Predictive AI

Leverage text classification models to find and score messages and conversations that may lead to sexual harms against children.

Protect Your Users

Semi-automate content moderation by using pertinent results to decrease visibility of potentially harmful content while waiting for manual review.

Empower Moderators

Conduct more in-depth investigations of related, pertinent results to produce more comprehensive, priority reports for reporting agencies.

"Safer Predict’s text classifier significantly improves our ability to prioritize and escalate high-risk content and accounts. The multiple labels and risk scores help our team focus on problem accounts, some of which we had been suspicious about but lacked actionable evidence before we deployed the classifier. Thorn’s expertise is evident in Safer’s ability to detect conversations that could lead to sexual harms against children."

Niles Livingston, Child Safety Manager, MediaLab

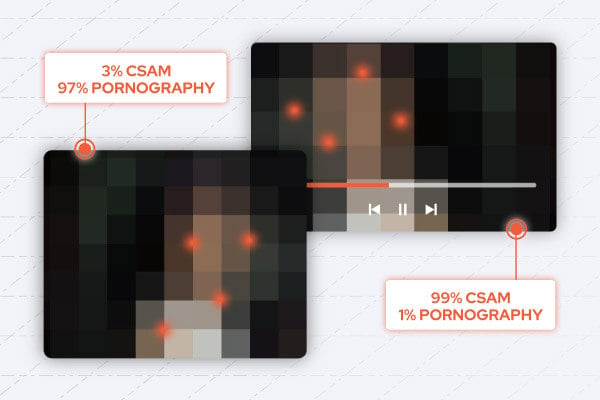

Zero In On High-Risk Areas

Detect conversations that could indicate or lead to CSE using Safer’s new machine learning text classification models. Expose high-risk areas by stacking multiple labels, including sextortion, self-generated, child access, has minor, CSAM, and more.

Safer Predict provides highly customizable workflows that allow you to develop a strategic detection plan, home in on high-risk accounts, and expand child sexual abuse detection coverage. Safer Predict enables you to effectively prioritize and escalate content by setting a precision level and leveraging the provided label for pertinent content.
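To make the idea of stacking labels and setting a precision level concrete, here is a minimal sketch in Python. It is not the Safer Predict API: the label identifiers, the `ScoredMessage` shape, the 0.8 threshold (standing in for a precision level), and the action names are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Set

# Hypothetical label names, loosely based on the labels listed above.
# They are NOT the actual Safer Predict label identifiers.
HIGH_RISK_LABELS = {"sextortion", "child_access", "csam"}


@dataclass
class ScoredMessage:
    message_id: str
    scores: Dict[str, float]  # label -> score in [0, 1]; assumed format


def stacked_labels(msg: ScoredMessage, threshold: float) -> Set[str]:
    """Return the high-risk labels whose score meets the threshold."""
    return {
        label
        for label, score in msg.scores.items()
        if label in HIGH_RISK_LABELS and score >= threshold
    }


def triage(msg: ScoredMessage, threshold: float = 0.8) -> str:
    """Map a scored message to a (hypothetical) moderation action.

    Two or more stacked labels: escalate and limit visibility pending
    manual review. One label: queue for review. None: no action.
    """
    hits = stacked_labels(msg, threshold)
    if len(hits) >= 2:
        return "escalate_and_limit_visibility"
    if len(hits) == 1:
        return "queue_for_review"
    return "no_action"
```

In a sketch like this, raising the threshold trades coverage for fewer false positives, and the number of stacked labels decides how urgently an item reaches a human reviewer.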

Beneficial use cases include:

- Scan all messages involving an account that has previously shared harmful content or been reported for community guideline violations.
- Scan accounts that have engaged with violators (e.g. viewed the shared content or communicated with a violator).
- Scan high-risk accounts (e.g. free or anonymous accounts).
- Enable content moderators to conduct more in-depth investigations of related, pertinent results, resulting in more comprehensive, priority reports for reporting agencies.
- Use multiple labels to quickly find and report actionable content, clearing out content moderator queues more efficiently.
- Semi-automate content moderation by using pertinent results to decrease visibility of harmful content while waiting for manual review.
- Create custom content moderation workflows based on platform policies.
- Allocate the technical resources as your team sees fit.
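The scanning use cases above amount to choosing which accounts to scan first. A minimal sketch of that selection in Python follows. The `Account` fields, the reason strings, and the priority order are assumptions for illustration and do not reflect any actual Safer Predict data model.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Account:
    account_id: str
    prior_violation: bool = False       # shared harmful content or was reported
    engaged_with_violator: bool = False  # viewed content from or messaged a violator
    is_free_or_anonymous: bool = False   # example of a high-risk account type


def scan_reason(account: Account) -> Optional[str]:
    """Return why an account should be scanned, or None if no rule applies.

    Rules are checked in an assumed priority order.
    """
    if account.prior_violation:
        return "prior_violation"
    if account.engaged_with_violator:
        return "engaged_with_violator"
    if account.is_free_or_anonymous:
        return "high_risk_account_type"
    return None


def select_for_scanning(accounts: List[Account]) -> List[Tuple[str, str]]:
    """Return (account_id, reason) pairs for accounts that match a rule."""
    selected = []
    for account in accounts:
        reason = scan_reason(account)
        if reason is not None:
            selected.append((account.account_id, reason))
    return selected
```

Recording the reason alongside each account ID lets a moderation workflow route or prioritize scans according to platform policy.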

If your platform has messaging capabilities, chances are you have an urgent need to address text-based harms at scale.

Online enticement is on the rise.

In 2023, NCMEC’s CyberTipline received 104+ million files of potential CSAM from electronic service providers alone, and reports of online enticement increased more than 300% from 2021 to 2023. Safer Predict can help you proactively detect this content on your platform.

File sharing, user-generated content, and text messaging are foundational to the internet as it exists today. Anyone can upload and share content widely or send a private message to a child.

An unintended consequence is how easy the internet has made it for bad actors to use technology to exploit children or share child sexual abuse material within communities that can flourish on the same platforms we all use every day.

Content moderation is complex.

Scanning for and identifying this harmful content, and enforcing the policies related to it, falls to content moderators. And, given the scale of this issue, it's safe to assume they are overwhelmed.

Trust & Safety teams juggle many priorities. Enforcing community guidelines while upholding your privacy policy. Scanning for harmful content while protecting user data. With Safer, you don't have to choose one or the other.

Safer can help you protect your platform with privacy in mind. Our text classification technology empowers you to be proactive in identification while providing a secure and flexible solution that puts you in control of how it integrates into your infrastructure and content moderation workflow.

Text classifiers built by experts in child safety technology.

We're on a mission to create a safer internet for children, beginning with the elimination of online sexual harms against children and targeting every platform with an upload button or messaging capabilities. Safer was created with the intention of transforming the internet by finding and removing child sexual abuse material, defending against revictimization, and diminishing the viral spread of new material.

With a relentless focus on content moderation that leverages advanced AI/ML models, proprietary research, and trusted training data, Safer enables digital platforms to come together and protect children online.